One in 25 children experiences online sexual solicitation in which the offender asks to meet them in the physical world. For law enforcement agencies, investigating crimes of cyberdeviance is a complicated task due to the overwhelming quantity of reports and the difficulty of parsing natural language, according to two professors in Purdue Polytechnic’s Department of Computer and Information Technology.

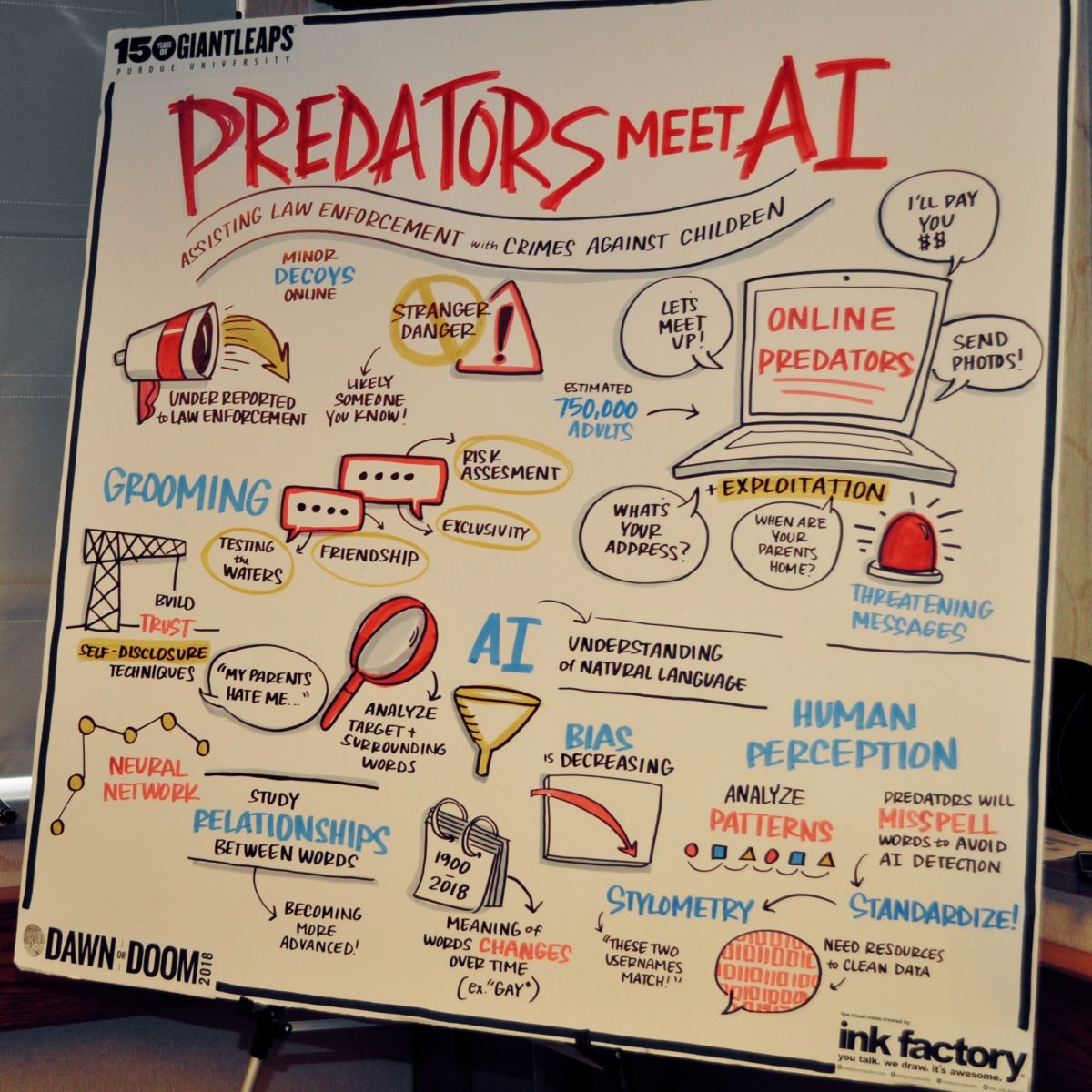

Julia Rayz, associate professor, and Kathryn Seigfried-Spellar, assistant professor, discussed the challenges in a presentation titled “Predators Meet AI: Assisting Law Enforcement with Crimes Against Children” at Purdue’s 2018 Dawn or Doom conference.

The National Center for Missing & Exploited Children’s CyberTipline received 10.2 million reports of child sexual exploitation in 2017 alone, Rayz and Seigfried-Spellar said. Chats between minors and offenders can last for weeks or even months, leading to thousands of lines of text that law enforcement must process quickly, for multiple victims, and on multiple messaging platforms.

Unlike humans, machines can process an enormous amount of data quickly. But the mindset of an Internet predator is difficult for machines to determine.

“Normal fantasies in the real world are things people have thought of but would never go through with,” said Seigfried-Spellar. “How do we identify the cases that law enforcement should go after?”

Natural language processing methodologies might help law enforcement prioritize cases involving crimes against children.

“If you build a nice enough representation of the words, somewhere between these multidimensional vectors, something has to happen between these words,” said Rayz. “The relationship has to be captured somehow so that we can test them via technology.”

Word meanings shift over time and with context, which complicates efforts to create artificial intelligence (AI) capable of processing text found online.

“To make a pattern out of all the words is nearly impossible unless you have some background information,” Rayz said. “With enough data, computers will be able to understand approximately what these words mean. Studying this has been going on since long before computers were involved.”

Video replay

Additional information:

- Dawn or Doom

- Chat Analysis Triage Tool (CATT) uses AI to catch sex offenders

- Getting artificial intelligence to recognize humor is no laughing matter

- CIT professor researches how fighting cyberdeviance affects police officers

- Future workforce to benefit from robots, smart materials, says Voyles

- Matson: Malicious use of autonomous technology threatens public safety