Speaking to machines has become routine. For example, you might have recently said something like: “Hey, Siri, set a timer for 15 minutes,” or “OK, Google, show me directions to the airport.”

Interacting with these home assistants by voice is especially helpful to anyone with a physical limitation, be it permanent, such as paralysis, or temporary, such as holding a baby who has finally fallen asleep. But what if someone has difficulty speaking because of illness or injury? Or what if a noisy manufacturing environment makes it impossible to hear and understand voice commands? How can humans communicate with machines without using their voices? Xiumin Diao, assistant professor of engineering technology, is teaching a robot to know what a human wants without that person saying anything.

“Imagine you and I are interacting with each other and I make a certain gesture,” said Diao, making a “come here” motion with one hand. “You recognize my gesture. You understand it. You know the intention of my actions. You can even predict what will occur next. But for now, even the most advanced robots on the market cannot achieve that level of comprehension. We can talk to the robot, but that’s all we can do.”

In his research, Diao uses action recognition and intention prediction.

“Action recognition has been intensely studied in the literature. For my research, I mainly focus on the latter part, intention prediction,” Diao said. “I want the robot to recognize the action. But more than that, I want it to predict the intention behind the action, such that the robot can understand the intention of human beings.”

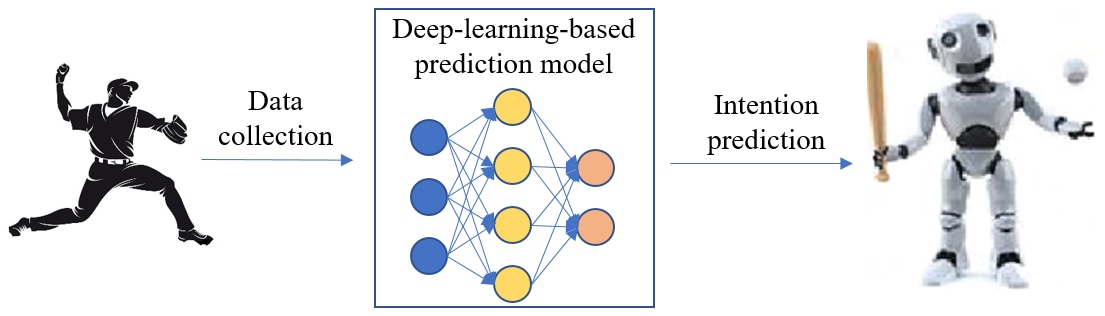

The simple action of throwing a ball toward a camera provided Diao with plenty of research data. As a person repeatedly pitched the ball, a camera recorded his movements and a computer stored the data. Diao collected data from thousands of pitching trials to train and test a deep-learning model for intention prediction.

The motions involved in each pitch were correlated with the location where each thrown ball arrived. The goal is for the robot to learn to predict where the ball will land (intention prediction) from the movements of the pitcher (action recognition). The robot can then act accordingly to catch the ball.
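The idea of learning a mapping from observed motion to a predicted landing point can be illustrated with a deliberately simple stand-in. The sketch below is not Diao's deep-learning model. It fits an ordinary least-squares linear model, in plain Python, to synthetic "pitches." The two motion features (lateral and upward hand speed at release), the linear relationship used to generate the data, and the names `fit_least_squares` and `make_synthetic_pitches` are all hypothetical, chosen only to show the input-to-output mapping.

```python
import random


def fit_least_squares(features, targets):
    """Ordinary least squares via the normal equations (X^T X) w = X^T y.

    Returns [intercept, w1, w2, ...]. Hypothetical stand-in for a learned model.
    """
    rows = [[1.0] + list(f) for f in features]  # prepend a bias column
    n = len(rows[0])
    a = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    b = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(a[k][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    # Back substitution.
    w = [0.0] * n
    for i in reversed(range(n)):
        w[i] = (b[i] - sum(a[i][j] * w[j] for j in range(i + 1, n))) / a[i][i]
    return w


def predict(weights, feature):
    """Predicted landing coordinate for one pitch's motion features."""
    return weights[0] + sum(wi * fi for wi, fi in zip(weights[1:], feature))


def make_synthetic_pitches(count, seed=0):
    """Hypothetical data: (lateral, upward) hand speed at release, labeled with
    where the ball crosses a target plane (horizontal, vertical), plus noise."""
    rng = random.Random(seed)
    pitches, landings = [], []
    for _ in range(count):
        vx = rng.uniform(-1.0, 1.0)
        vy = rng.uniform(-1.0, 1.0)
        x = 0.9 * vx + 0.05 + rng.gauss(0, 0.01)
        y = 0.7 * vy - 0.2 + rng.gauss(0, 0.01)
        pitches.append((vx, vy))
        landings.append((x, y))
    return pitches, landings


pitches, landings = make_synthetic_pitches(1000)
# One model per landing coordinate.
w_x = fit_least_squares(pitches, [p[0] for p in landings])
w_y = fit_least_squares(pitches, [p[1] for p in landings])

new_pitch = (0.5, -0.25)
print(predict(w_x, new_pitch), predict(w_y, new_pitch))
```

For the new pitch, the printed prediction should sit close to (0.5, -0.375), the point implied by the synthetic rule. A deep-learning model like the one described above would learn far richer features directly from recorded motion; the linear fit here only illustrates the idea of predicting an outcome from the motion that precedes it.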

Physical therapy was the motivation.

Although ball-throwing provided the analytical data for his research, Diao’s motivation originated in the field of medicine. During physical rehabilitation, if a patient needs assistance to stand up from a chair, a physical therapist needs to apply a certain amount of force and torque to help lift the patient into a standing position.

“But the timing is critical,” explained Diao. “If the patient tries to stand, but the therapist applies that force and torque a second too soon or a second too late, the patient’s experience of standing will not work properly.”

A robot specializing in physical rehabilitation, however, could recognize a patient's intention to stand and apply the right force and torque at the right moment, leading to a better outcome for that patient.

“Physical rehabilitation is just one application,” said Diao. “Now that I’ve done this work, I’ve found that it can be applied to many other areas.” As an example, Diao mentioned the potential of a smart treadmill that could recognize and analyze a person’s speed and manner of walking, or their gait.

“Using gait analysis, the treadmill speed adapts to the person as opposed to the person adapting to the treadmill. That’s another way to take advantage of intention prediction.”

Diao’s goal is to teach machines to predict human intentions so that on-demand devices can be controlled accurately, applying the correct force and torque at the correct time.

“I want the robot assistant to do something for me,” he said. “The robot should understand my intention quickly and accurately, so that we can work more closely and collaborate closely. That’s the key.”

Diao’s research team includes Lin Zhang, a postdoctoral research associate, and Shengchao Li, a graduate teaching assistant. Zhang’s earlier research included a case study on human action recognition and intention prediction.