The Neurocars project implements a genetically trained, feed-forward multi-layer perceptron that learns to drive a physically modeled race car around a race track. The entire project is written in C# within the Unity3D environment. The race car model and physics were provided by the AnyCar package, available on the Unity Asset Store. It was written as a proof of concept that a simple ANN could be trained quickly with a genetic algorithm to handle a basic game task.

Network Architecture

The neural network is purposefully simple so that it can be trained quickly. It consists of a two-node input layer, two 10-node hidden layers, and a two-node output layer.

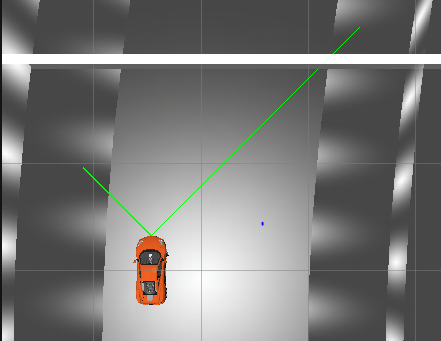

The first input node receives the ratio of the car's current velocity to its maximum velocity, normalized to the range -1 to +1. The second input node receives the ratio between two feeler rays cast at +45 and -45 degrees from the center of the front of the car.
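
The input encoding can be sketched as follows. This is a minimal Python illustration, not the project's C# code, and the function names are hypothetical. A raw ratio of two ray distances is unbounded, so the sketch assumes it is squashed into -1 to +1 with (l - r) / (l + r). That expression equals (ratio - 1) / (ratio + 1), so it is still a function of the ratio the text describes.

```python
def speed_input(speed: float, max_speed: float) -> float:
    """Current speed as a fraction of maximum speed, clamped to [-1, +1].

    A negative speed (reversing) gives a negative input.
    """
    if max_speed <= 0:
        raise ValueError("max_speed must be positive")
    return max(-1.0, min(1.0, speed / max_speed))


def feeler_input(left_dist: float, right_dist: float) -> float:
    """Compare the hit distances of the two 45-degree feeler rays.

    ASSUMPTION: the plain ratio left/right is unbounded, so it is mapped to
    [-1, +1] as (l - r) / (l + r), i.e. (ratio - 1) / (ratio + 1).
    Positive means more open space on the left.
    """
    total = left_dist + right_dist
    if total <= 0:
        return 0.0  # both rays blocked at the bumper: no preference
    return (left_dist - right_dist) / total


def network_inputs(speed, max_speed, left_dist, right_dist):
    """Hypothetical helper assembling the two-element input vector."""
    return [speed_input(speed, max_speed), feeler_input(left_dist, right_dist)]
```

For example, a car at half its top speed with 30 units of clearance on the left and 10 on the right would produce the inputs [0.5, 0.5].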

The output layer also has two nodes, normalized to the same range. The first sets the angle of the steering wheel. The second sets pressure on the gas pedal when positive and pressure on the brake when negative. (Folding these two controls into one output matches the player interface of the AnyCar physics engine.) A sigmoid was used as the activation function, and a single bias array was applied across all node calculations.
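
A minimal Python sketch of the forward pass and output decoding follows. It is not the project's C# code. The sketch assumes the genome is a flat list holding all weights, followed by one bias per hidden and output node, which is one reading of "a single bias array". The logistic sigmoid yields values between 0 and 1, so the sketch also assumes the final outputs are rescaled with 2σ(x) - 1 to reach the -1 to +1 control range. Under this layout the 2-10-10-2 network has 2·10 + 10·10 + 10·2 = 140 weights plus 22 biases, or 162 genes.

```python
import math

# 2 inputs, two hidden layers of 10, 2 outputs (from the text).
LAYER_SIZES = [2, 10, 10, 2]


def weight_count(sizes=LAYER_SIZES) -> int:
    return sum(a * b for a, b in zip(sizes, sizes[1:]))


def genome_length(sizes=LAYER_SIZES) -> int:
    """All weights plus one bias per hidden/output node (assumed layout)."""
    return weight_count(sizes) + sum(sizes[1:])


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def forward(genome, inputs, sizes=LAYER_SIZES):
    """Feed-forward pass; returns one value per output node in [-1, +1]."""
    if len(genome) != genome_length(sizes):
        raise ValueError("genome has the wrong length for this layout")
    n_weights = weight_count(sizes)
    weights, biases = genome[:n_weights], genome[n_weights:]
    w = b = 0  # read positions in the weight and bias arrays
    activations = list(inputs)
    for n_out in sizes[1:]:
        layer = []
        for _ in range(n_out):
            total = biases[b]
            b += 1
            for a in activations:
                total += weights[w] * a
                w += 1
            layer.append(sigmoid(total))
        activations = layer
    # ASSUMPTION: rescale only the final logistic outputs onto [-1, +1].
    return [2.0 * a - 1.0 for a in activations]


def decode(outputs):
    """Split the outputs into steering, throttle and brake (AnyCar-style folding)."""
    steer, pedal = outputs
    throttle = max(pedal, 0.0)  # positive pedal -> gas
    brake = max(-pedal, 0.0)    # negative pedal -> brake
    return steer, throttle, brake
```

For example, `decode([0.3, -0.6])` gives a steering value of 0.3, no throttle, and a brake pressure of 0.6.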

The genetic algorithm searched for weights and biases between -1 and +1. It used weighted random selection of parent genomes based on the car scores (see below). A mutation rate of 0.07 was applied. As an optimization, one breeding pair in each generation was not randomly selected but was instead taken from the two highest-scoring cars.
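
The breeding step might look like the following Python sketch. It is not the project's code. Weighted random selection is implemented here as roulette-wheel selection with `random.Random.choices`. The text does not specify the crossover scheme, the mutation operator, or how many children a pair produces. This sketch therefore assumes uniform crossover, a per-gene reset to a fresh value in [-1, +1], and one child per breeding pair.

```python
import random

MUTATION_RATE = 0.07  # from the text
GENE_MIN, GENE_MAX = -1.0, 1.0  # weights and biases lie in [-1, +1]


def pick_parent(population, scores, rng):
    """Weighted random (roulette-wheel) selection by fitness score."""
    # ASSUMPTION: negative scores count as zero; all-zero scores fall back to uniform.
    weights = [max(s, 0.0) for s in scores]
    if sum(weights) == 0:
        return rng.choice(population)
    return rng.choices(population, weights=weights, k=1)[0]


def crossover(mother, father, rng):
    """ASSUMPTION: uniform crossover, each gene taken from either parent."""
    return [m if rng.random() < 0.5 else f for m, f in zip(mother, father)]


def mutate(genome, rng, rate=MUTATION_RATE):
    """ASSUMPTION: each gene is re-drawn in [-1, +1] with probability `rate`."""
    return [rng.uniform(GENE_MIN, GENE_MAX) if rng.random() < rate else g
            for g in genome]


def next_generation(population, scores, rng, rate=MUTATION_RATE):
    """Breed a same-sized population; one child per breeding pair (assumed)."""
    if len(population) < 2:
        raise ValueError("need at least two genomes to breed")
    ranked = sorted(range(len(population)), key=lambda i: scores[i], reverse=True)
    best, runner_up = population[ranked[0]], population[ranked[1]]
    # One pair per generation is the two highest-scoring cars, not a random draw.
    children = [mutate(crossover(best, runner_up, rng), rng, rate)]
    while len(children) < len(population):
        mother = pick_parent(population, scores, rng)
        father = pick_parent(population, scores, rng)
        children.append(mutate(crossover(mother, father, rng), rng, rate))
    return children
```

The elite pair guarantees that the best genes found so far are recombined every generation, even when random draws favor weaker cars.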

Training and Test Environment

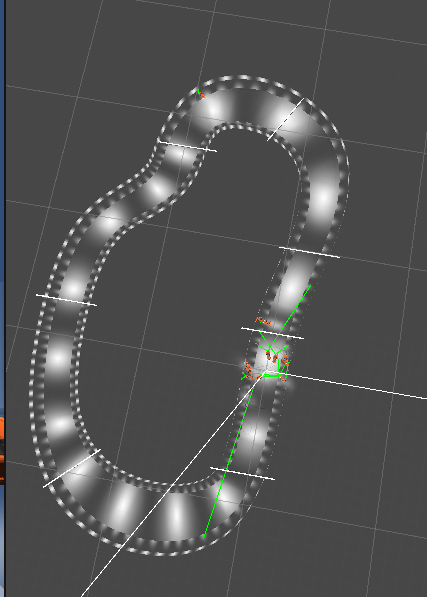

The cars are trained and evaluated on an oval track. The track contains an S-curve approximately halfway around to prevent the solution from devolving into a simple left turn. A race lasts 90 seconds or until all cars have been removed from the track, whichever comes first. When either condition occurs, new genomes are bred based on the car fitness scores, as explained below, and a new generation is tested.
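
The race termination rule can be sketched as a fixed-step loop in Python. `Car` and the `step` callback are hypothetical stand-ins for the Unity scene and its physics update. The 20 ms step size is also an assumption.

```python
from dataclasses import dataclass

RACE_TIME_LIMIT_S = 90.0  # from the text


@dataclass
class Car:
    """Hypothetical stand-in for a car in the Unity scene."""
    removed: bool = False


def race_over(elapsed_s, cars, limit_s=RACE_TIME_LIMIT_S):
    """True once the time limit is reached or every car has been removed."""
    return elapsed_s >= limit_s or all(car.removed for car in cars)


def run_race(cars, step, dt=0.02, limit_s=RACE_TIME_LIMIT_S):
    """Call `step(cars, elapsed_s)` at a fixed rate until the race is over.

    `step` is a hypothetical physics/driving callback that may mark cars as
    removed. Returns the elapsed race time in seconds.
    """
    steps = 0
    elapsed = 0.0
    while not race_over(elapsed, cars, limit_s):
        step(cars, elapsed)
        steps += 1
        elapsed = steps * dt  # avoids accumulating floating-point drift
    return elapsed
```

Once `run_race` returns, the cars' fitness scores would be handed to the breeding step to produce the next generation.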

Scoring gates, each represented by a sequentially numbered cuboid, are placed at intervals across the track. Passing through a cuboid has the following effects on the car:

- If the cuboid's number is greater than that of the last encountered cuboid, 100*<cuboid number> is added to that car's fitness score.

- If the cuboid's number is less than that of the previously encountered cuboid (with the exception below), the car is assumed to be driving backwards around the track and is removed. The car does remain in the breeding population, with its last calculated score.

- Exception: If the starting cuboid's number is encountered again, the car is considered to have completed a full circuit and is awarded a fitness bonus equal to 1000+((90-<time in seconds it took to complete the track>)*100).
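
The gate rules translate into a small per-car state machine. This Python sketch is not the project's code. It assumes the gates are numbered 0 to num_gates - 1, with the start at gate 0. As an added guard not stated in the text, a lap only counts when the car reaches the start gate directly from the last gate. Ignoring repeated passes through the same gate, and any gates passed after a finished lap, are further assumptions.

```python
from dataclasses import dataclass

RACE_TIME_LIMIT_S = 90.0  # from the text
START_GATE = 0            # ASSUMPTION: the start line is gate 0


@dataclass
class CarScore:
    num_gates: int                # gates numbered 0 .. num_gates - 1 (assumed)
    last_gate: int = START_GATE   # cars begin at the start gate
    fitness: float = 0.0
    removed: bool = False
    finished: bool = False


def on_gate(car: CarScore, gate: int, elapsed_s: float) -> None:
    """Apply the scoring rules when `car` passes through gate number `gate`."""
    if car.removed or car.finished or gate == car.last_gate:
        return  # ASSUMPTION: ignore re-entering the same gate and post-lap gates
    if gate == START_GATE and car.last_gate == car.num_gates - 1:
        # Full circuit: bonus = 1000 + (90 - lap time in seconds) * 100
        car.fitness += 1000 + (RACE_TIME_LIMIT_S - elapsed_s) * 100
        car.finished = True
    elif gate > car.last_gate:
        car.fitness += 100 * gate  # higher-numbered gate: 100 * gate number
    else:
        # Lower-numbered gate: treated as driving backwards. The car leaves the
        # track but keeps its score for breeding.
        car.removed = True
    car.last_gate = gate
```

With eight gates, passing gates 1 through 7 earns 100 × (1 + 2 + ... + 7) = 2800. Finishing the lap at 60 seconds adds 1000 + (90 - 60) × 100 = 4000, for a total fitness of 6800.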

Results

The cars were trained under two different road conditions: high traction and low traction. Under low-traction conditions the cars learned to use "drift" (a sideways skid) to their advantage, whereas under high-traction conditions they mostly held a lane position. Although the driving styles produced were noticeably different, the scoring results were surprisingly consistent across both.

Within 2 to 3 generations, one or more cars would learn to complete the track with a fitness score of at least 4000. Within a further 4 to 5 generations, a high fitness score of about 7400 was reached. Beyond that point, gains were incremental and much slower to occur.

The initial tests were done without a time-based bonus to the completion score. In these cases, the cars learned to drive very slowly and carefully around the track. After a bonus for time was added, they exhibited the desired behavior of learning to race.

Conclusions

This experiment showed that a very simple, shallow neural network trained with a genetic algorithm can quickly and successfully learn a limited task of moderate player difficulty, with no example data and only a fitness score to train from. Our experience tuning the fitness score shows that it is critical for such a score to numerically reward the actual desired behavior.

Although much recent research has focused on deep learning models, these require much more data and time to train. Our results suggest that relatively shallow neural networks can be trained online to perform game tasks and to adapt quickly to changing game situations.

Codebase

The code for this experiment is available on GitHub at https://github.com/profK/NeuroCars