Aerial photography has been popular ever since people began taking cameras into the sky, starting in the mid-1800s with hot air balloons and continuing to the present day with modern aircraft. The use of aerial imagery from unmanned aerial systems (UAS) is a more recent phenomenon, and it provides important data in situations that are dangerous and/or impractical for ground crews.

Current technology relies largely on people to visually interpret UAS imagery, slowing down the process of determining whether images warrant on-the-ground action. Ziyang Tang, a graduate student in Purdue Polytechnic’s Department of Computer and Information Technology, is studying methods to improve computer interpretation of images.

Detecting irregular objects like wildfires

Working with Xiang Liu, a graduate research assistant; Hanlin Chen, a postdoctoral research assistant; Joseph Hupy, associate professor of aviation technology; and Baijian “Justin” Yang, professor of computer and information technology, Tang noted that recent increases in processing power have made computers better at detecting objects with fixed sizes and/or regular shapes.

“Deep learning has had great success with detecting objects like people and vehicles,” said Tang. “But little has been done to help computers detect objects with amorphous and irregular shapes, such as spot fires.”

In the United States, wildfires have caused thousands of deaths and tens of billions of dollars of damage in recent years. Unmanned aerial systems, commonly known as drones, can be deployed rapidly and into areas where manned aircraft cannot.

If computers could interpret aerial imagery from drones accurately, firefighters and first responders might be better able to focus their resources.

Speed is another factor, Tang said.

“In an actual wildfire event, there is a distinct level of organized chaos,” he said. “The operator of a UAS platform is often monitoring hundreds of spot fires and having to track multiple fire crews and related equipment on the ground. Because we are fallible and suffer from fatigue and short attention spans, some objects can be overlooked.”

Machines, on the other hand, can work without fatigue. A small fire could potentially be prevented from spreading if machines could recognize it accurately and quickly.

Because no previous work had been done in wildfire detection using deep neural networks, Tang’s research team worked to introduce an algorithm that will assist ground-based crews with identification of fire and other ground-based objects related to fire events. The research focused on two main challenges:

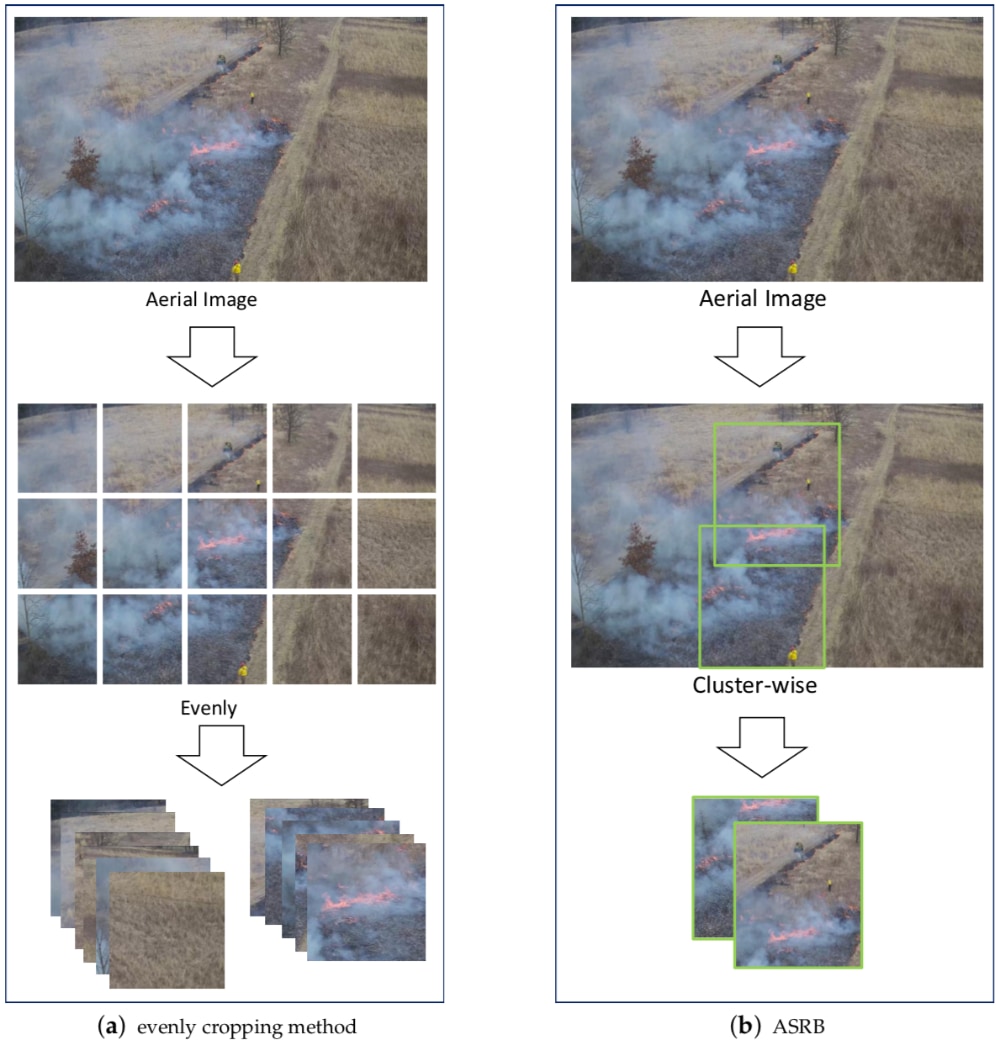

- Existing neural network-based object detection methods give promising results only on low-resolution images (e.g., 600 x 400 pixels), but drone images are usually acquired in high definition, with dimensions in the thousands of pixels.

- Existing neural network-based detectors rely on well-annotated datasets, but fire can be amorphous and cannot be captured by a single rectangular bounding box. As a result, labeling fire in high-resolution images is time-consuming.

To acquire data for analysis, the researchers examined 4K (3,840 x 2,160 pixel) videos collected via drones during a controlled burn.
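
To give a sense of the scale gap between the two resolutions mentioned above, the short sketch below compares pixel counts and counts how many 600 x 400 tiles it would take to cover one 4K frame. The non-overlapping tiling and the helper names (`pixel_count`, `tiles_needed`) are assumptions made for illustration only; they are not described in the research.

```python
import math


def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in a frame of the given size."""
    return width * height


def tiles_needed(frame_w: int, frame_h: int, tile_w: int, tile_h: int) -> int:
    """Tiles required to cover a frame, assuming a simple non-overlapping grid.

    The grid layout is a hypothetical baseline, not the researchers' method.
    """
    return math.ceil(frame_w / tile_w) * math.ceil(frame_h / tile_h)


frame = (3840, 2160)   # 4K drone video frame, as stated in the article
low_res = (600, 400)   # example low-resolution detector input from the article

ratio = pixel_count(*frame) / pixel_count(*low_res)
tiles = tiles_needed(*frame, *low_res)

print(f"A 4K frame has about {ratio:.1f}x the pixels of a 600 x 400 image")
print(f"Covering it with 600 x 400 tiles would take {tiles} tiles")
```

Under these assumptions, scanning every tile of every frame multiplies the detection work many times over, which is one way to see why processing full 4K imagery directly is costly.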

“Coarse-to-fine” strategy

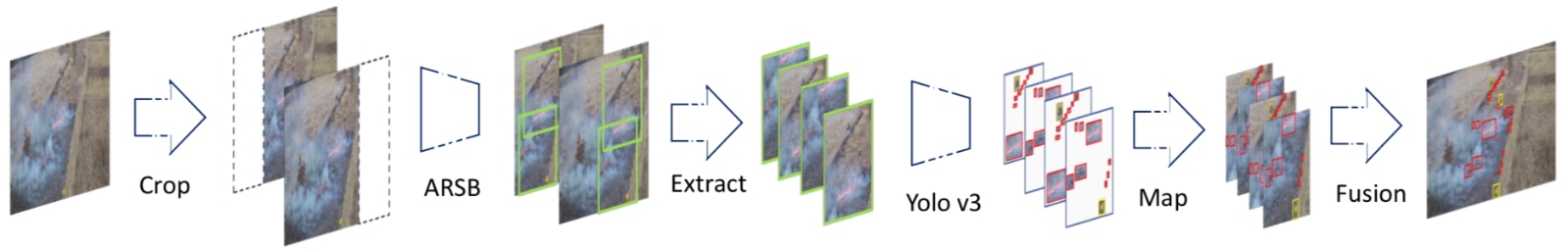

Tang and his research team introduced what they believe is the first public, high-resolution wildfire dataset, containing 1,400 annotated aerial images and 18,449 instances. Each instance represents an object such as a fire, a truck or a person. They also introduced a “coarse-to-fine” strategy to automatically detect wildfires that are sparse, small and irregularly shaped. The coarse detector adaptively selects subregions of the image that are likely to contain objects of interest (such as fires) and passes along only those subregions, rather than the entire 4K frame, for further scrutiny.

“After extracting objects from high resolution images, we zoom in to detect the small objects and fuse the final results back into the original images,” said Tang. “Our experiments show that the method can achieve high accuracy while maintaining fast speeds.”
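
The general shape of a coarse-to-fine pipeline can be sketched in a few lines of Python. This is a minimal illustration, not the team's algorithm: simple brightness thresholds stand in for the neural network detectors, the image is a plain grayscale grid, and every function name and parameter here (`downsample`, `coarse_regions`, `fine_detect`, `factor`, the thresholds) is hypothetical.

```python
from typing import List, Optional, Tuple

Image = List[List[float]]          # grayscale image as rows of brightness values
Box = Tuple[int, int, int, int]    # (x0, y0, x1, y1), end-exclusive


def downsample(img: Image, factor: int) -> Image:
    """Average-pool the image by an integer factor to get a cheap, coarse view."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(0, h - h % factor, factor):
        row = []
        for x in range(0, w - w % factor, factor):
            block = [img[y + dy][x + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def coarse_regions(img: Image, factor: int, threshold: float) -> List[Box]:
    """Coarse pass: flag full-resolution tiles whose pooled value looks promising.

    ASSUMPTION: a brightness threshold stands in for a learned coarse detector.
    """
    small = downsample(img, factor)
    return [(x * factor, y * factor, (x + 1) * factor, (y + 1) * factor)
            for y, row in enumerate(small)
            for x, value in enumerate(row) if value >= threshold]


def fine_detect(img: Image, region: Box, threshold: float) -> Optional[Box]:
    """Fine pass: tight box around bright pixels, searching only inside the region.

    ASSUMPTION: a pixel threshold stands in for a learned fine detector.
    """
    x0, y0, x1, y1 = region
    hits = [(x, y) for y in range(y0, y1) for x in range(x0, x1)
            if img[y][x] >= threshold]
    if not hits:
        return None
    xs = [x for x, _ in hits]
    ys = [y for _, y in hits]
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


def coarse_to_fine(img: Image, factor: int = 4,
                   coarse_thr: float = 0.1, fine_thr: float = 0.5) -> List[Box]:
    """Run the coarse pass, then zoom in; boxes are in full-image coordinates."""
    boxes = []
    for region in coarse_regions(img, factor, coarse_thr):
        box = fine_detect(img, region, fine_thr)
        if box is not None:
            boxes.append(box)
    return boxes


# Synthetic 16 x 16 frame with one small bright spot as a stand-in for a fire.
frame = [[0.0] * 16 for _ in range(16)]
for y in (6, 7):
    for x in (5, 6):
        frame[y][x] = 1.0

print(coarse_to_fine(frame))
```

The point of the structure is that the fine pass inspects only the tiles the coarse pass flagged, and because the fine boxes are expressed in full-image coordinates they can be drawn straight back onto the original frame.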

Scientists say that wildfires are caused, in part, by severe heat and drought. These conditions are linked to climate change, so the need for fast and accurate computer analysis of such conditions is ongoing. Tang said that future research projects could expand the size of the UAS wildfire imagery collection. Also, as new UAS platforms equipped with more powerful CPUs and GPUs (the processors in computers responsible for general computations and graphical computations, respectively) become available, the team's image analysis methods could be further refined.

“Fusing data collected from multiple types of sensors can provide additional wisdom in wildfire fighting scenarios,” said Tang. “A hybrid approach that combines signal processing with deep learning could lead to a faster and more accurate technique to identify both small objects of interests and objects with irregular boundaries in high definition videos and images.”