How can students learn when there aren’t enough teachers, especially in remote locations? Textbooks provide a good start – but it’s easier to learn with the help of a human instructor. When none are available, can computers step in?

Lifelike computer-generated instructors that speak fluently, employ human-like gestures, understand and correctly interpret human speech, and recognize and believably emulate human emotion have been commonplace in science fiction for decades. Two Purdue Polytechnic professors, together with collaborators at the University of California, Santa Barbara, are taking steps toward making lifelike affective educational avatars a reality.

Nicoletta Adamo, professor of computer graphics technology, and Bedrich Benes, George McNelly professor of technology and professor of computer science, are researching the ways in which emotional computer-animated instructors, or agents, affect student learning. Their research also aims to guide the design of affective (that is, emotionally expressive) on-screen agents that work well for different types of learners.

Decades of animated research

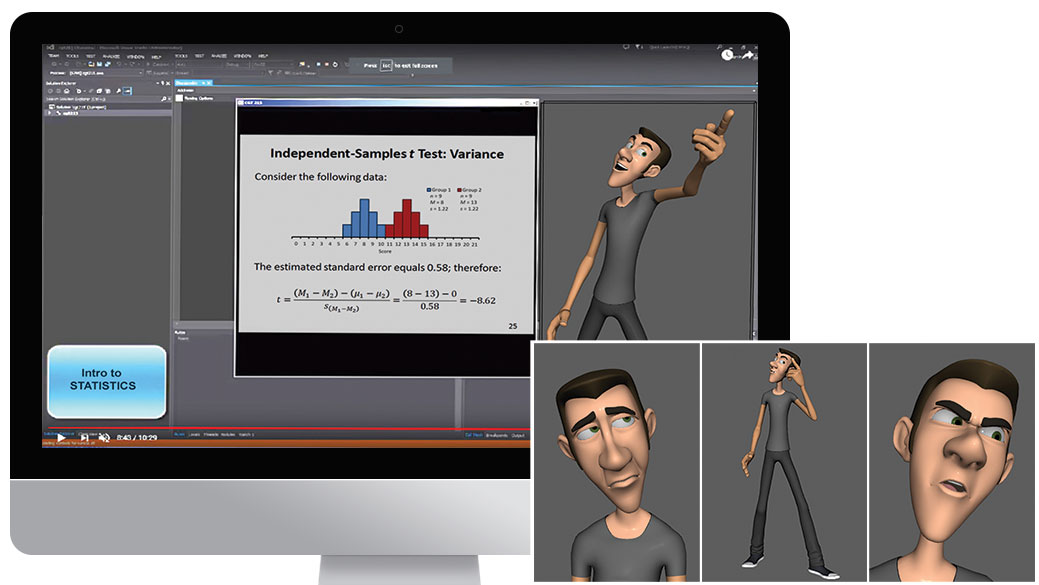

Adamo has been working on character animation for more than 20 years. She has conducted extensive research on teaching avatars, including the use of gesturing avatars to teach math and science via sign language to children who are deaf. Her research also led to the creation of software that enables educators who don’t have programming or animation expertise to generate computer-animated instructors from a script. Adamo’s research showed that young learners, especially those not proficient in reading or fluent in English, found learning easier when the material was presented through speech with context-appropriate gestures.

“Speech analysis research provides some of the rules for how to make a teaching avatar talk and produce certain language-specific gestures,” she said. “My research focused on charisma and personality gestures and how to generate them automatically based on the script.”

For their current research, “Multimodal Affective Pedagogical Agents for Different Types of Learners,” Adamo and Benes are collaborating with Richard E. Mayer, distinguished professor of psychology at the University of California, Santa Barbara. The National Science Foundation-sponsored project represents an integration of several areas of research:

- Computer graphics research on lifelike and believable representation of emotion in embodied agents

- Advanced methods and techniques from artificial intelligence and computer vision for real-time recognition of human emotions

- Cognitive psychology research on how people learn from affective on-screen agents

- Efficacy of affective agents for improving student learning of STEM (science, technology, engineering and math) concepts

Effective affective agents

The team’s new research goes beyond Adamo’s 2017 project, which focused on automating the creation of effective animated gestures for an avatar programmed to teach math concepts.

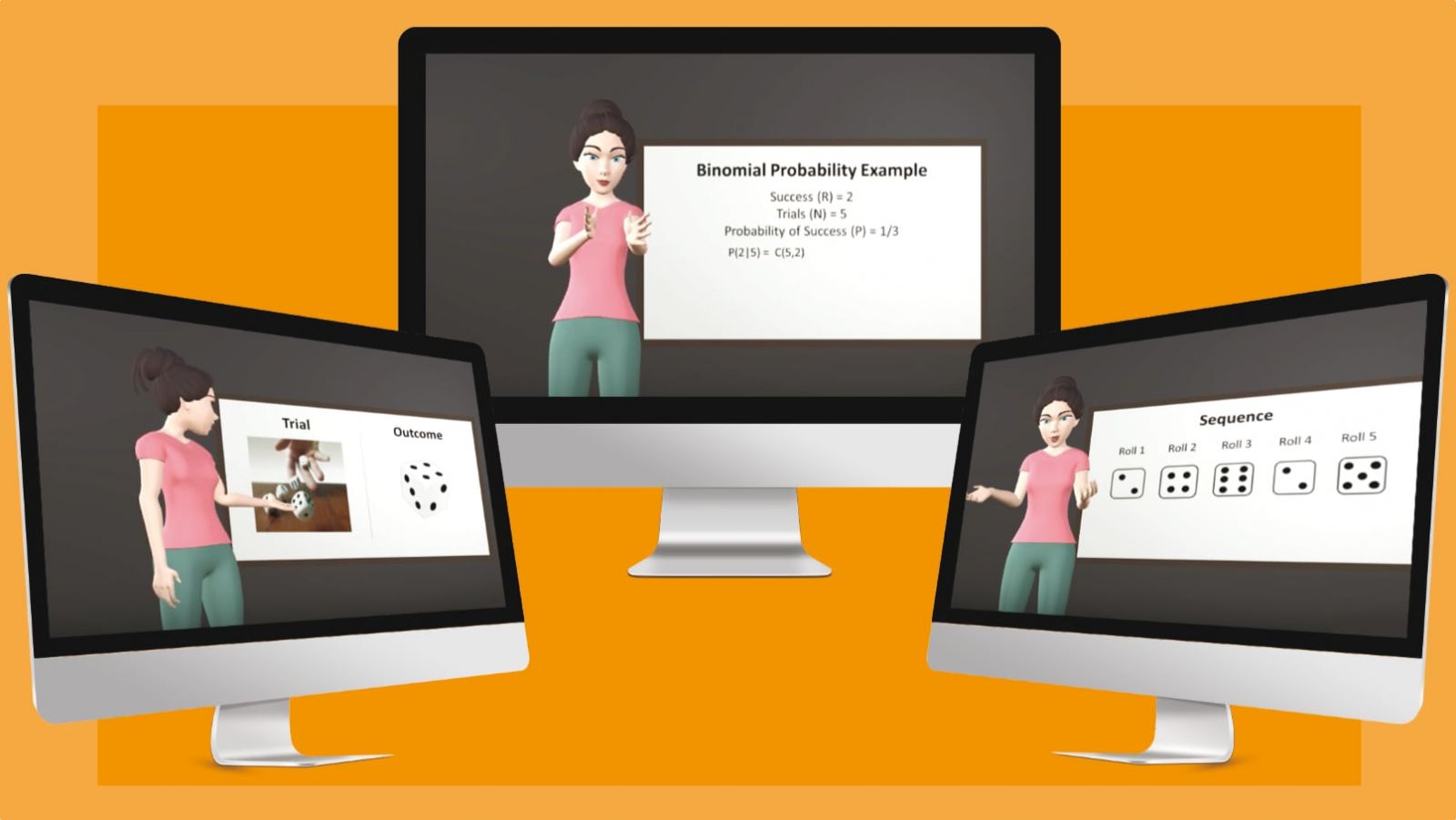

“We’re taking this a step forward,” said Adamo. “We are developing animated avatars that not only teach using speech and gestures but also show emotions and emotional intelligence. They’re called ‘affective agents.’”

They’re studying whether the emotional style of the agent affects student learning. If the agent presents a lesson with excitement, for example, do students remain engaged? If the avatar is dull, do learners get bored?

To gather research data during experiments, the team is working to automate the animation of avatars, making it easier to display different emotional styles.

“We’ve done a little bit so far,” Adamo said. “There’s a script for what the avatar should say in the lecture. We want to develop automation to add emotion to the avatar and give it the ability to display the emotional state through facial expressions and body language.”

The style of those gestures is also important, said Benes. “For example, hand gestures should be faster when showing excitement, and facial expressions should change. We want to encode all this to automatically generate the animation.”
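One way to picture the kind of encoding Benes describes is a table of style parameters per emotion that is applied to a gesture's timing. The following is a minimal sketch under stated assumptions, not the team's software: the `EmotionStyle` class, the `STYLES` table, the `retime_gesture` function and every numeric value are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmotionStyle:
    """Hypothetical style parameters for one emotional state."""
    gesture_speed: float    # >1.0 plays gestures faster, <1.0 slower
    smile_intensity: float  # 0.0 (none) to 1.0 (full), for a face rig


# Illustrative values only; they are not taken from the researchers' system.
STYLES = {
    "excited": EmotionStyle(gesture_speed=1.5, smile_intensity=0.9),
    "neutral": EmotionStyle(gesture_speed=1.0, smile_intensity=0.3),
    "dull": EmotionStyle(gesture_speed=0.5, smile_intensity=0.0),
}


def retime_gesture(keyframe_times, style):
    """Scale keyframe timestamps (in seconds) to the style's gesture speed."""
    return [t / style.gesture_speed for t in keyframe_times]


# A hand wave authored at neutral speed, replayed in two emotional styles.
wave = [0.0, 1.5, 3.0]
excited_wave = retime_gesture(wave, STYLES["excited"])  # finishes sooner
dull_wave = retime_gesture(wave, STYLES["dull"])        # finishes later
```

In this sketch the same authored gesture is reused across styles, so only the parameter table changes when a new emotional style is added.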

Adapting to real emotion

Another goal, added Benes, is to determine whether the system can recognize the students’ emotions and react to them. To gauge the reaction of test subjects during experiments, Mayer produced two videos of affective agents. In one, the agent is excited. In the other, the agent is dull.

“If you are excited as a learner, what is the best response of the agent to keep you engaged?” asked Benes. “Does the agent need to remain as excited, or temper its emotions?”

On the other hand, if students become inattentive, the agent needs to choose a course of action. The agent might need to shout, attempt to be entertaining, or perhaps even leave the room for a time. It’s challenging to determine which action would be most effective for learning.

“This has been studied with real teachers,” Adamo said. “It’s an interesting question: How do we transfer that to avatars?”

“It needs to be a bidirectional way of communication,” Benes said. “We’re trying to break the paradigm of one-way interaction. The agent needs to adapt to the learner.”

For the research, Benes said, they’ve developed their own system, built on custom software and a webcam, that can detect emotions in real time.

“It’s based on a large dataset with a deep neural network that has been trained on existing humans’ emotions, and it can feed data back into the system,” he said. “It’s still under development, but we can recognize seven expressions so far, with differing qualities. The systems are dependent on the training data. It’s not easy to get accurate data on different human emotions. Neutral is actually the hardest to detect.”

The researchers assume that their subjects won’t have a neutral face for long, Adamo said. The system is being designed to recognize emotions in two dimensions, she said: valence, which runs from negative to positive, and arousal, which runs from inactive to active.
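The two dimensions can be read as coordinates on a plane, with each quadrant corresponding to a broad emotional state. The sketch below illustrates that idea only. It assumes both values are scaled to the range -1 to 1, and the function name `classify_affect`, the four quadrant labels and the `dead_zone` threshold for "neutral" are hypothetical choices, not details of the researchers' system.

```python
def classify_affect(valence: float, arousal: float, dead_zone: float = 0.1) -> str:
    """Map a (valence, arousal) pair to a coarse label.

    Both inputs are assumed to lie in [-1, 1]. Points very close to the
    origin are treated as neutral; the labels are illustrative only.
    """
    if abs(valence) < dead_zone and abs(arousal) < dead_zone:
        return "neutral"
    if valence >= 0:
        # Positive valence: active is "excited", inactive is "content".
        return "excited" if arousal >= 0 else "content"
    # Negative valence: active is "frustrated", inactive is "bored".
    return "frustrated" if arousal >= 0 else "bored"


print(classify_affect(0.8, 0.7))    # positive and active
print(classify_affect(-0.6, -0.5))  # negative and inactive
```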

“The webcam sees the students’ facial expressions and the software tries to recognize their emotions,” said Benes. “Our new system reads facial expressions like confusion, boredom, happiness or excitement.” The system then reacts accordingly. If it detects confusion, for example, the agent could slow its pace or offer a more detailed explanation to the learner.
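The detect-then-react loop can be sketched as a lookup from a detected emotion to candidate agent actions. Only the confusion response (slow the pace or give a more detailed explanation) comes from the description above. Everything else here is an assumption made for illustration: the `Action` enum, the `POLICY` table, the other mappings, the `choose_actions` function and the confidence threshold.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue at the current pace"
    SLOW_DOWN = "slow the pace"
    ELABORATE = "offer a more detailed explanation"
    RE_ENGAGE = "try to re-engage the learner"


# Hypothetical policy table. Only the "confusion" row reflects the article;
# the remaining rows are placeholders for whatever the experiments support.
POLICY = {
    "confusion": [Action.SLOW_DOWN, Action.ELABORATE],
    "boredom": [Action.RE_ENGAGE],
    "happiness": [Action.CONTINUE],
    "excitement": [Action.CONTINUE],
}


def choose_actions(detected_emotion: str, confidence: float,
                   min_confidence: float = 0.6) -> list:
    """Return candidate actions, ignoring low-confidence detections."""
    if confidence < min_confidence:
        return [Action.CONTINUE]
    return POLICY.get(detected_emotion, [Action.CONTINUE])


for action in choose_actions("confusion", confidence=0.9):
    print(action.value)
```

Falling back to `Action.CONTINUE` for uncertain or unknown detections is a design choice in this sketch, so a misread expression does not interrupt the lesson.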

Better animation = better teachers

When considering the emotional representation abilities of affective pedagogical agents, Adamo, Benes and Mayer note that the animation quality of existing agents is low. Highly engaging animated characters that display convincing personality and emotions are seen frequently in entertainment and games, but they haven’t yet had a substantial impact in education.

The team expects to lean on Adamo’s long experience in character animation to improve the design and quality of existing affective agents. They aim to create algorithms that guide the animation of emotion, generating lifelike gestures, speech, body movements and facial expressions from both data-driven and synthesis approaches. An existing database of specific gestures developed during earlier research will be expanded.

They also hope that the project will lead to improvements in the basic visual design of the avatars. What designs maximize learning? Certain visual features could have effects on learning. Agents could be designed to look realistic or like cartoons, for example. Adamo noted that they will also be watching for potential correlation between the agents’ features and student characteristics such as age, ethnicity and level of expertise. In earlier research, she noticed that young learners preferred an avatar that appeared as a casually dressed teenager over another that appeared as a professionally dressed young adult.

The emotional state of the learner and the emotional state displayed by the instructor can also have substantial effects on student learning, according to research. Adamo, Benes and Mayer are examining the instructional effectiveness of affective agents that respond appropriately to learners’ emotions, seeking to build lifelike, multimodal expressive agents with adaptive emotional behaviors.

The research team includes graduate students Justin Cheng and Xingyu Lei, who are working on animation and motion capture. Wenbin Zhou developed the emotion recognition system. Undergraduates Kiana Bowen and Hanna Sherwood worked on character design.

“The ultimate goal is to enable anybody who is not an animator to generate these affective agents and embed them in an online lecture,” said Adamo. “That’s the goal. We’re not there yet.”

But the team does not intend for its research to replace human teachers.

“We want to help people who might not have access to high quality education,” said Adamo, “but face-to-face interaction with real people remains very important.”