A Multimodal Dataset for Objective Cognitive Workload Assessment on Simultaneous Tasks (MOCAS)

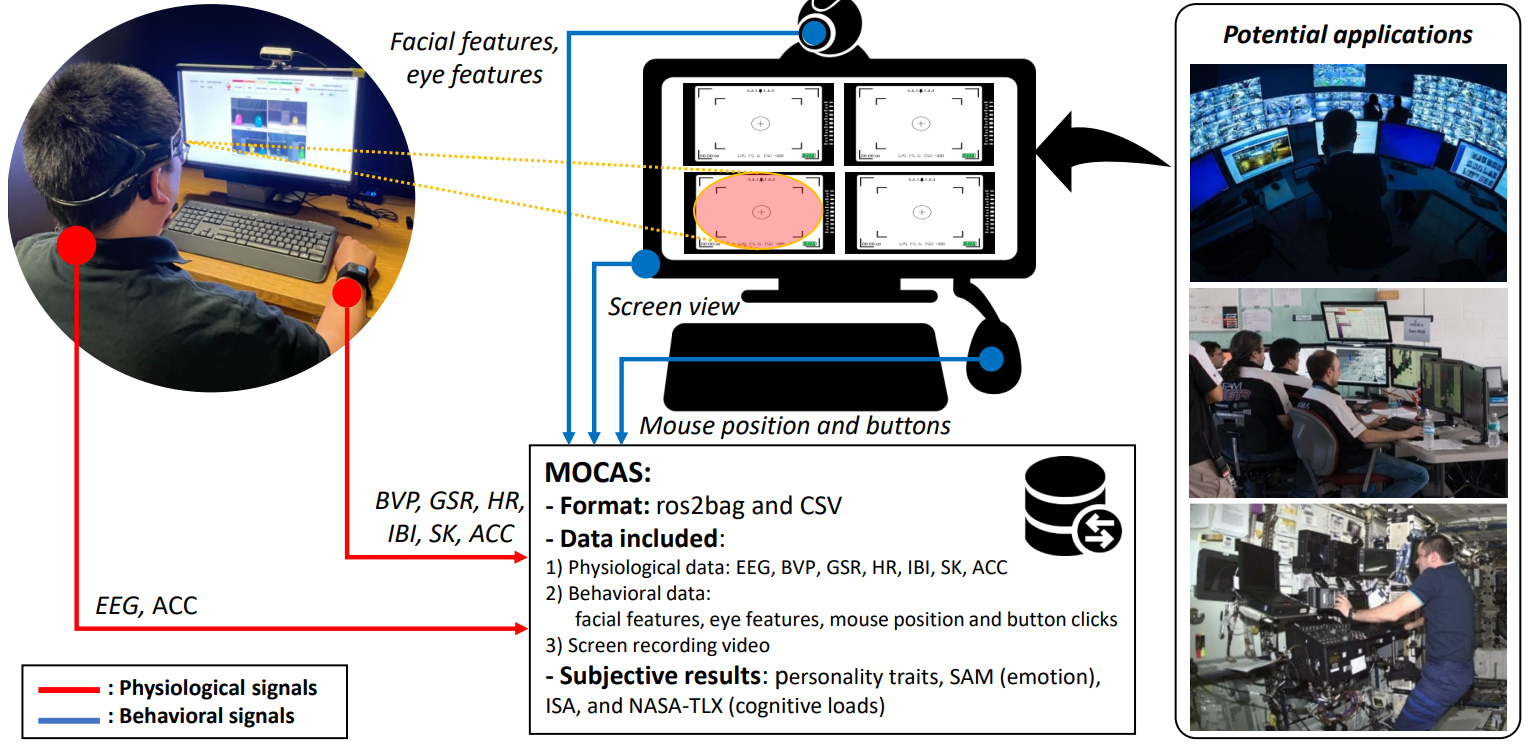

Illustration of the design of the MOCAS dataset.

1. Abstract

MOCAS is a multimodal dataset dedicated to human cognitive workload (CWL) assessment. In contrast to existing datasets based on virtual game stimuli, the data in MOCAS were collected from realistic closed-circuit television (CCTV) monitoring tasks, which increases its applicability to real-world scenarios. To build MOCAS, two off-the-shelf wearable sensors and one webcam were used to collect physiological signals and behavioral features from 21 human subjects. After each task, participants reported their CWL by completing the NASA-Task Load Index (NASA-TLX) and Instantaneous Self Assessment (ISA). Personal background (e.g., personality and prior experience) was surveyed using demographic and Big Five Factor personality questionnaires. Two domains of subjective emotion information (i.e., arousal and valence) were obtained from the Self-Assessment Manikin; these could serve as potential indicators for improving CWL recognition performance. Technical validation was conducted to demonstrate that the target CWL levels were elicited during simultaneous CCTV monitoring tasks, and its results also support the high quality of the collected multimodal signals.

2. Dataset summary

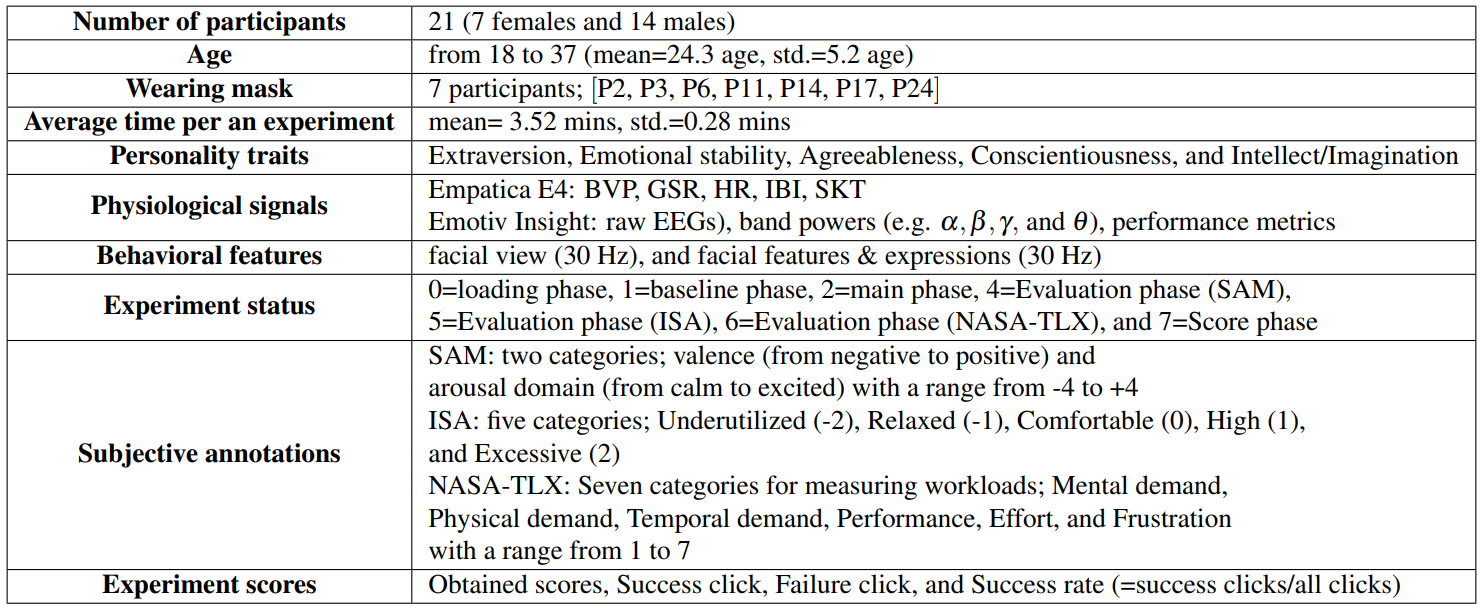

Table 1. Summary of the MOCAS dataset contents

Table 1 summarizes the MOCAS dataset, which contains multimodal data from 21 participants, including physiological signals, facial camera videos, mouse movements, screen recording videos, and subjective questionnaires. The total size of the dataset is about 722.4 GB, comprising 754 ROSbag2 files.

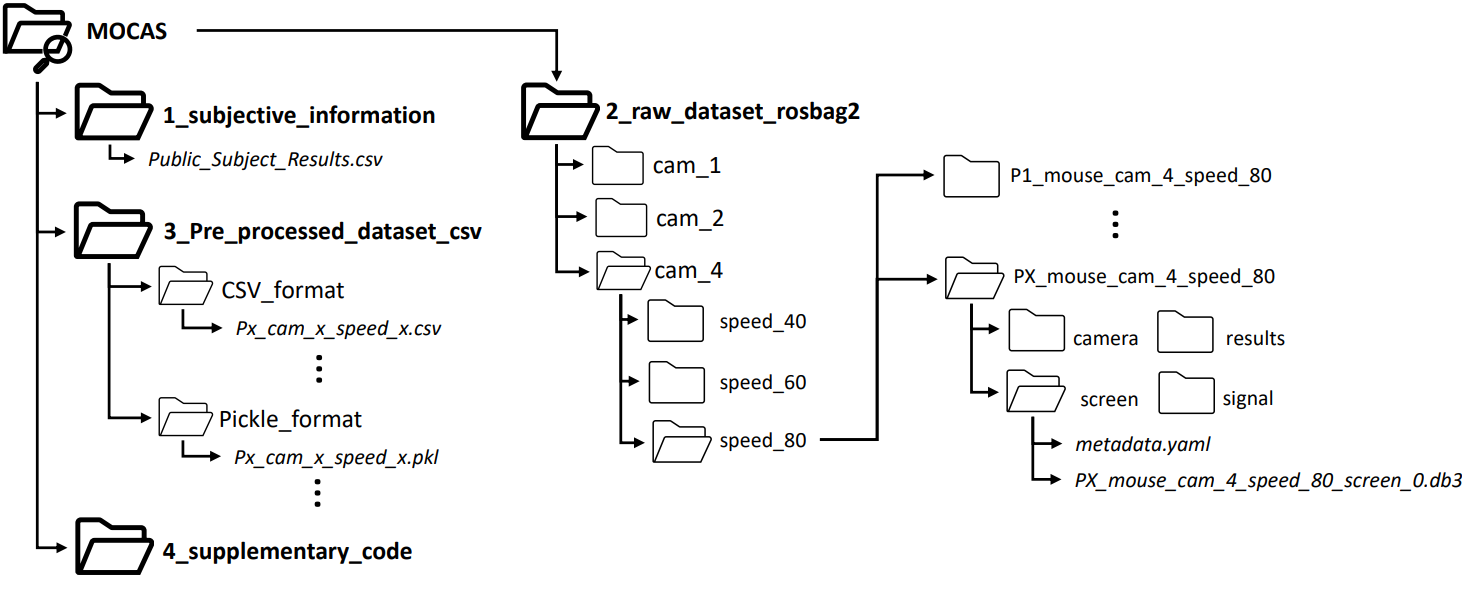

A folder tree of the online repository that displays directory paths and files for the MOCAS dataset.

- 1_subjective_information: a single CSV file containing each subject's demographic information (e.g., age, gender, personality traits, and experience), answers to the subjective questionnaires used in this experiment, and performance (e.g., scores, successful clicks, failed clicks, and success rate).

- 2_raw_dataset_rosbag2: raw ROSbag2 files, saved into sub-folders organized by experimental factor (e.g., robot speed and the number of camera views) and data type (e.g., camera, signal, results, and screen). Each lowest-level sub-folder of this folder contains two files: metadata.yaml and *.db3.

- 3_downsampled_dataset: downsampled CSV-format and Pickle-format files with a 100 Hz sampling rate. The overall sizes of the CSV and Pickle files are 51.9 GB and 32.8 GB, respectively. Each file includes the annotations from the subjective questionnaires, the number of camera views, the robot speed, features of the physiological and behavioral data, and filtered physiological signals.

- 4_supplementary_code: contains the code that converts raw ROSbag2-format files into Pickle-format and CSV-format files, along with the supplementary code used in this paper. The code is written in Python under Ubuntu 20.04.
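
As a quick way to check what a raw recording contains before running the converters, the sketch below lists the topics stored in a single *.db3 file. It is not the converter shipped in 4_supplementary_code. It assumes the files use the default rosbag2 SQLite storage layout (a `topics` table and a `messages` table), and the function name `list_rosbag2_topics` and the path in the usage comment are hypothetical. Decoding the message payloads themselves still requires a ROS 2 environment.

```python
import sqlite3
from contextlib import closing
from pathlib import Path


def list_rosbag2_topics(db3_path):
    """Return (topic name, message type, message count) for one .db3 file.

    Assumption: the file follows the default rosbag2 SQLite storage layout,
    i.e. it has a `topics` table and a `messages` table.
    """
    # Open the file read-only so a raw recording cannot be altered by accident.
    uri = Path(db3_path).resolve().as_uri() + "?mode=ro"
    with closing(sqlite3.connect(uri, uri=True)) as conn:
        rows = conn.execute(
            "SELECT t.name, t.type, COUNT(m.id) "
            "FROM topics AS t "
            "LEFT JOIN messages AS m ON m.topic_id = t.id "
            "GROUP BY t.id "
            "ORDER BY t.name"
        ).fetchall()
    return rows


# Usage (hypothetical path; substitute a real *.db3 file from the dataset):
# for name, msg_type, count in list_rosbag2_topics("path/to/recording.db3"):
#     print(name, msg_type, count)
```

The message bodies are stored as serialized binary blobs, so this only reports which topics exist and how many messages each one holds.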

3. Dataset description of the downsampled dataset

The raw ROSbag2-format files were converted to Pickle-format and CSV-format files, which were saved to two different folders according to file format (i.e., CSV and Pickle). Each file contains the raw signals, the EEG signal of each channel separated from the chunked EEG signals, and behavioral features extracted from the facial videos, e.g., action units (AUs), facial expression types and probabilities, and Eye Aspect Ratio (EAR).
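
For readers unfamiliar with the EAR feature, the sketch below shows the commonly used definition based on six eye landmarks: the sum of the two vertical eyelid distances divided by twice the horizontal eye width. This is an illustration only. The exact landmark detector and formula used to produce the EAR values in MOCAS are not stated here, and the function name and landmark ordering are assumptions.

```python
import math


def eye_aspect_ratio(landmarks):
    """Compute the Eye Aspect Ratio from six (x, y) eye landmarks.

    Assumed ordering: p1 and p4 are the eye corners, p2 and p3 lie on the
    upper eyelid, and p6 and p5 lie on the lower eyelid below p2 and p3.
    """
    p1, p2, p3, p4, p5, p6 = landmarks
    # Two vertical eyelid openings.
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    # Horizontal eye width between the two corners.
    horizontal = math.dist(p1, p4)
    if horizontal == 0:
        raise ValueError("eye corners coincide; EAR is undefined")
    return vertical / (2.0 * horizontal)


# Synthetic landmarks for illustration only (not taken from the dataset).
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0), (2, 0), (3, 0), (2, 0), (1, 0)]
print(eye_aspect_ratio(open_eye))    # larger value: eye open
print(eye_aspect_ratio(closed_eye))  # 0.0: eyelids touching
```

Under this definition the ratio drops toward zero as the eye closes, which is why it is often used as a blink or drowsiness indicator.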

4. How to access the MOCAS

Only authorized researchers who consent to the End User License Agreement (EULA) are allowed to download MOCAS. Researchers who want to access MOCAS should first download the EULA document (Click here: Download MOCAS's EULA).

After reviewing and filling out the document, they should send it to info@smart-laboratory.org, including the Zenodo account information they will use to access the dataset. Our research group will then invite them to our online dataset repository, which hosts the MOCAS dataset and the example code used in this paper.

- (temporary, 10/6/2022) Raw dataset (ROSbag2 format, 760 GB):

https://purdue.box.com/v/mocas-dataset

- (temporary, 10/6/2022) Downsampled (CSV and Pickle formats, 19 GB):

https://zenodo.org/record/7023242

If you have any problems, please contact us via info@smart-laboratory.org.

5. Acknowledgment

This material is based upon work supported by the National Science Foundation under Grant No. IIS-1846221. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.